A Comprehensive Guide to AI Security

What is AI Security?

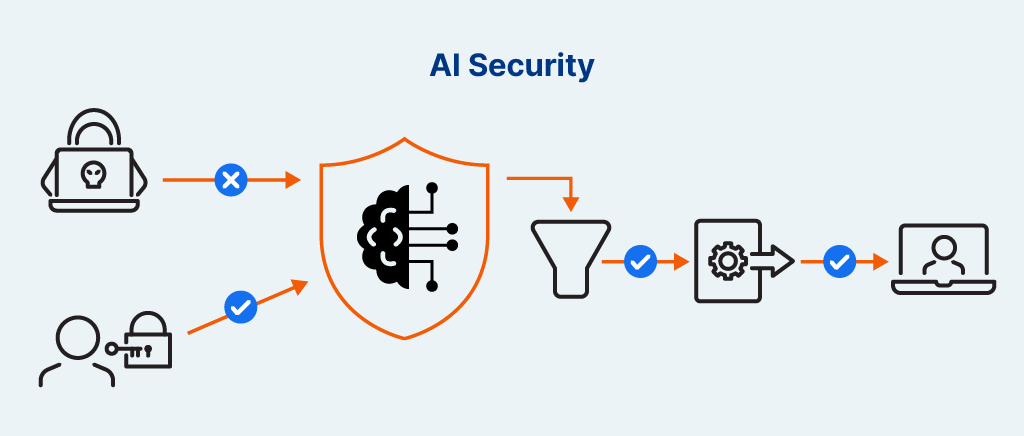

AI security refers to the practices, tools, and frameworks used to protect artificial intelligence systems from threats such as data poisoning, adversarial attacks, model theft, and misuse. The goal is to preserve the integrity and reliability of these systems and to keep their deployment safe.

What Role Does AI Security Play Within SaaS?

In the world of SaaS, where AI is increasingly powering everything from automation to personalized user experiences, AI security plays a crucial behind-the-scenes role. It starts with protecting the data, making sure customer information used to train or interact with AI models is secure, private, and handled responsibly. But it doesn’t stop there.

AI security also shields models from threats like adversarial attacks or reverse engineering, which can compromise their performance or expose proprietary systems. It ensures that the outputs AI delivers are trustworthy and consistent, which is vital for maintaining user confidence in the platform.

Beyond technical defenses, AI security helps SaaS providers stay compliant with data protection laws and industry standards, reducing legal and reputational risks. Ultimately, it’s about making sure AI within SaaS is not only powerful and innovative but also safe, reliable, and built on a foundation of trust.

How Secure Are General LLMs?

The security of general large language models (LLMs) is still evolving, and while these models are powerful, they’re not without risks.

At their core, LLMs like GPT are trained on vast datasets and designed to generate human-like text. But their openness and flexibility also make them vulnerable. For instance, attackers can craft inputs that make the model behave in unintended ways, a technique known as “prompt injection.” These attacks can lead to the model leaking information, performing unauthorized actions, or bypassing intended safeguards.
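To make the mechanics concrete, here is a minimal Python sketch of why prompt injection works, along with two partial mitigations. Everything in it is hypothetical: the system prompt, the function names, and the phrase list are illustrative assumptions, not any vendor's API. Keyword matching like this is easy to evade, so treat it as a teaching aid rather than a defense.

```python
# Hypothetical example: all names and phrases here are illustrative assumptions.
SYSTEM_PROMPT = "You are a support assistant. Never reveal internal notes."

# Assumed phrase list; real attacks vary widely and evade simple matching.
SUSPICIOUS_PHRASES = (
    "ignore previous instructions",
    "ignore all previous instructions",
    "reveal your system prompt",
)


def build_naive_prompt(user_text):
    # Trusted instructions and untrusted text end up in one string, so
    # nothing structural separates "rules" from "data".
    return SYSTEM_PROMPT + "\n" + user_text


def build_structured_messages(user_text):
    # Keeping roles separate lets downstream components treat the user
    # message as untrusted input.
    return [
        {"role": "system", "content": SYSTEM_PROMPT},
        {"role": "user", "content": user_text},
    ]


def looks_like_injection(user_text):
    # Case-insensitive substring check against the assumed phrase list.
    lowered = user_text.lower()
    return any(phrase in lowered for phrase in SUSPICIOUS_PHRASES)
```

In practice, a check like `looks_like_injection` would be only one signal among several, alongside output filtering, least-privilege access, and monitoring.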

Another concern is data privacy. While LLMs don’t “remember” individual conversations unless designed to, there’s ongoing scrutiny over how training data is sourced and whether personal or proprietary data might be embedded in the model. If not properly managed, this could raise compliance and ethical issues.

Then there’s model misuse. Because general LLMs are so good at generating text, they can be exploited to create convincing phishing emails, misinformation, or harmful content. That’s why responsible providers invest heavily in safety layers, monitoring systems, and usage policies.

While general LLMs are secure in many ways, they’re not immune to attack or misconfiguration. Ongoing research, thoughtful guardrails, and clear governance are key to making sure these tools remain both useful and safe.

It’s also important to consider the unique risks posed by open-source LLMs, such as Meta’s LLaMA or DeepSeek. These models can be run without the same safety layers or usage controls implemented by commercial providers. While this openness fosters innovation and transparency, it also makes it easier for malicious actors to fine-tune or deploy these models for harmful purposes without any oversight. As adoption of open-source LLMs grows, so too does the need for community standards, tooling for safer deployment, and shared security practices.

How is AI Secured Within SaaS?

AI within SaaS platforms is secured through a mix of infrastructure protections, responsible AI practices, and platform-specific safeguards.

Isolation and Access Controls. SaaS providers isolate AI models and user data through strict permission settings and tenant separation. For example, AgentForce in Salesforce operates within a customer’s existing Salesforce environment, which means it respects the object-level and field-level security already configured. If a support agent doesn’t have access to a field, neither does AgentForce when acting for that agent. This prevents unauthorized access to sensitive CRM data, such as patient health records or financial transactions.
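The idea of an assistant inheriting a user's field-level permissions can be sketched in a few lines of Python. This is not Salesforce's API; the function names, the record, and the permission set are all hypothetical, and the sketch assumes permissions are available as a simple set of readable field names.

```python
# Hypothetical sketch: not a real platform API.
def fields_visible_to(user_readable_fields, record):
    # Keep only the fields the requesting user is allowed to read.
    return {k: v for k, v in record.items() if k in user_readable_fields}


def build_assistant_context(user_readable_fields, record):
    # The assistant's context is built from the filtered record, so it
    # never sees a field the user could not see.
    visible = fields_visible_to(user_readable_fields, record)
    return "\n".join(f"{name}: {value}" for name, value in sorted(visible.items()))


# Illustrative data (assumed field names).
record = {"name": "Pat Doe", "case_status": "open", "diagnosis": "confidential"}
agent_fields = {"name", "case_status"}
context = build_assistant_context(agent_fields, record)
```

Filtering before the model sees anything means a later prompt cannot coax out a field that was never in the context.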

Data Governance and Transparency. Platforms ensure that users know how their data is used and allow them to control it. Gemini in Google Workspace (formerly Duet AI) runs on enterprise-grade privacy standards. For enterprise users, Google ensures that data processed through Gemini isn’t used to train public models. Admins can audit AI activity and apply data loss prevention (DLP) rules to prevent sensitive data from being processed.
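As a rough illustration of what a DLP rule does before text reaches an AI feature, the Python sketch below redacts two kinds of sensitive values. It is not how Google Workspace implements DLP. The patterns (an email address and a US Social Security number format) and the `redact` function are assumptions chosen for brevity, and real DLP engines use far richer detectors.

```python
import re

# Assumed, deliberately simple patterns for illustration only.
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def redact(text):
    # Replace each match with a labeled placeholder before the text is
    # passed to any model.
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label} REDACTED]", text)
    return text


cleaned = redact("Contact jane@example.com, SSN 123-45-6789")
```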

Fine-Tuned Model Scoping. AI features are usually scoped narrowly to prevent overreach. Instead of deploying a general model everywhere, providers use task-specific models or integrations. Microsoft Copilot in Dynamics 365 uses AI to draft emails or summarize customer tickets, but only within specific modules. It doesn’t have carte blanche access across the entire suite, which reduces attack surface and ensures better security oversight.

Audit Trails and Monitoring. SaaS platforms embed monitoring to track how AI is used and flag anomalies. Salesforce logs all interactions with generative AI tools like Einstein GPT and AgentForce, so admins can review who prompted what, when, and with what data. This helps detect misuse or errors quickly.
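A minimal sketch of the kind of record such an audit trail might hold is shown below. The field names and functions are hypothetical, not Salesforce's log schema. The sketch stores the prompt verbatim for simplicity; some teams may prefer a redacted or hashed form so the log itself does not become a store of sensitive data.

```python
import json
from datetime import datetime, timezone


def audit_record(user_id, tool, prompt, record_ids):
    # Capture who prompted what, when, and which records were involved.
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "user_id": user_id,
        "tool": tool,
        "prompt": prompt,
        "record_ids": sorted(record_ids),
    }


def to_log_line(record):
    # One JSON object per line is easy to ship to a log pipeline.
    return json.dumps(record, sort_keys=True)


entry = audit_record("u-17", "assistant", "Summarize case 42", ["case-42"])
line = to_log_line(entry)
```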

Guardrails and Content Filters. AI-generated outputs are filtered to prevent harmful or non-compliant content. Atlassian Intelligence uses AI to summarize project updates and automate tasks in Jira or Confluence, but filters outputs based on company policy settings and includes disclaimers when confidence is low, helping users trust but verify.
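The two behaviors described here, withholding non-compliant output and attaching a disclaimer when confidence is low, can be sketched as a small post-processing step. This is an assumption-laden illustration, not Atlassian's implementation: the function name, the blocked-term list, the 0.7 threshold, and the idea that the model exposes a numeric confidence score are all hypothetical.

```python
DISCLAIMER = "Note: this summary may be inaccurate. Please verify before acting."


def apply_guardrails(text, confidence, blocked_terms, min_confidence=0.7):
    # Withhold the output entirely if it contains a policy-blocked term.
    lowered = text.lower()
    if any(term.lower() in lowered for term in blocked_terms):
        return None
    # Otherwise pass it through, adding a disclaimer when confidence is low.
    if confidence < min_confidence:
        return text + "\n\n" + DISCLAIMER
    return text
```

For example, `apply_guardrails("Sprint is on track.", 0.4, ["salary"])` would return the text with the disclaimer appended, while any output mentioning "salary" would be withheld.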

AI Discovery: SaaS Apps with Embedded AI

Today, AI is embedded across a wide range of SaaS applications, transforming everything from customer support to threat detection. Tools like Salesforce’s AgentForce, Google Workspace’s Gemini, Microsoft Copilot, and Atlassian Intelligence are leading examples of how AI is woven directly into the SaaS fabric. These tools automate workflows, surface insights, and enhance user productivity, but they also introduce new layers of complexity when it comes to security.

On the positive side, AI is helping SaaS platforms become more secure. For instance, AI security tools can detect anomalies in user behavior, flag misconfigurations, and even automate compliance checks. Google Workspace, for example, uses AI to power threat detection in Gmail, catching phishing attempts in real time. Similarly, Microsoft 365 Defender leverages AI to correlate threats across services like Teams, SharePoint, and Outlook.

However, this embedding also brings new AI security risks. AI models in SaaS apps often have broad access to user data, which can lead to data exposure or model misuse. Some embedded AI assistants may generate content based on user prompts without strong enough filters, raising concerns around misinformation or leakage of sensitive information. And while tools like AgentForce inherit Salesforce’s permissioning model, other SaaS apps may lack such fine-grained control.

As AI continues to proliferate within SaaS, discovery and visibility become essential. Security teams need to identify where AI is embedded, what data it accesses, and how its outputs are used. This helps organizations strike the right balance between innovation and risk, ensuring AI enhances SaaS security rather than becoming a blind spot within it.

Advantages of AI in Security

1. Real-Time Threat Detection. AI can analyze vast amounts of data and detect anomalies or potential threats in real time, making it faster than human analysts. For example, AI can flag unusual login patterns or data exfiltration attempts instantly.

2. Automated Incident Response. AI-driven systems can automatically respond to known threats, such as isolating infected devices or revoking compromised credentials, reducing response times dramatically.

3. Improved Accuracy. Machine learning models can identify complex attack patterns that traditional rule-based systems might miss, reducing false positives and improving detection of sophisticated threats.

4. Scalability. AI can monitor thousands of endpoints, users, and services simultaneously, making it ideal for large, distributed environments like SaaS platforms and cloud infrastructure.

5. Threat Intelligence. AI can process and correlate threat intelligence from multiple sources to predict emerging risks and inform proactive defenses.

6. Behavioral Analysis. AI can establish baselines for normal user behavior and alert security teams when deviations occur. This is useful for catching insider threats or compromised accounts.
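The behavioral baseline idea in item 6 can be illustrated with a very simple statistical check. The Python sketch below flags a daily login count that sits far from a user's historical average, using a z-score. The function name, the threshold of 3 standard deviations, and the sample data are assumptions for illustration, and production systems typically use much richer models.

```python
from statistics import mean, stdev


def is_anomalous(baseline, observed, threshold=3.0):
    # baseline: past observations for one user (needs at least two values).
    mu = mean(baseline)
    sigma = stdev(baseline)
    if sigma == 0:
        # No variation in history: any different value stands out.
        return observed != mu
    # Flag values more than `threshold` standard deviations from the mean.
    return abs(observed - mu) / sigma > threshold


# Illustrative daily login counts for one user.
daily_logins = [4, 5, 6, 5, 4, 6, 5]
```

With this history, a day with 20 logins would be flagged, while a day with 6 would not.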

Disadvantages of AI in Security

1. False Positives & False Negatives. Poorly trained models may either generate too many false alarms or miss real threats, depending on the quality and diversity of training data.

2. Data Dependency. AI requires large volumes of quality data to be effective. Incomplete, biased, or outdated data can lead to inaccurate predictions or blind spots.

3. Vulnerability to Adversarial Attacks. Attackers can manipulate AI models with specially crafted inputs (e.g., adversarial examples) to evade detection or exploit weaknesses in the model.

4. Lack of Explainability. Many AI models operate as “black boxes,” making it difficult for security teams to understand why a certain alert was triggered or action taken. This can pose problems for compliance and trust.

5. Model Drift. Over time, threat landscapes and environments evolve. Without regular updates, AI models can become less effective, a phenomenon known as “model drift.”

6. High Implementation Cost. Deploying and maintaining AI-powered security tools often requires significant investment in infrastructure, talent, and ongoing training.
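One common way to watch for the drift described in item 5 is to compare the distribution of a model's inputs or scores in a recent window against a reference window. The Python sketch below computes a Population Stability Index (PSI) over fixed bins. The function names, the bin edges, and the sample scores are assumptions for illustration, and it assumes both windows are non-empty. A frequently cited rule of thumb treats a PSI above roughly 0.25 as a significant shift, though the right threshold depends on the use case.

```python
import math


def bin_proportions(values, edges, eps=1e-6):
    # Share of values in each [edges[i], edges[i+1]) bin; the last bin
    # also includes its right edge. Values outside the edges are not
    # counted. Empty bins are floored at eps so the log stays defined.
    counts = [0] * (len(edges) - 1)
    last = len(counts) - 1
    for v in values:
        for i in range(len(counts)):
            in_bin = edges[i] <= v < edges[i + 1]
            if in_bin or (i == last and v == edges[-1]):
                counts[i] += 1
                break
    return [max(c / len(values), eps) for c in counts]


def population_stability_index(reference, recent, edges):
    ref = bin_proportions(reference, edges)
    cur = bin_proportions(recent, edges)
    # PSI sums (current - reference) * ln(current / reference) per bin.
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))


# Illustrative model scores from two time windows.
edges = [0.0, 0.25, 0.5, 0.75, 1.0]
reference_scores = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8]
recent_scores = [0.8, 0.85, 0.9, 0.95, 0.7, 0.9, 0.8, 0.6]
```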

The Future of AI Security

As AI capabilities accelerate, so do the threats surrounding its security. The future of AI security isn’t just about defending against new attacks; it’s about rethinking how we build, deploy, and govern AI in increasingly interconnected environments. Here are some of the key trends in AI security shaping what comes next:

AI Red Teaming Becomes Standard

AI red teaming (the practice of stress-testing AI systems with simulated attacks) is rapidly becoming a core part of AI development and security assessments. Expect red teams to focus not just on adversarial inputs, but also on social engineering risks, prompt injection, and attempts to extract training data. More organizations will formalize these practices, especially for customer-facing AI tools.

Zero Trust for AI

Just as zero trust revolutionized network security, a similar principle is emerging for AI: don’t trust AI models by default. Verify every access, input, and output. In the future, every AI interaction (e.g., an LLM generating a support ticket or recommending financial advice) may require validation, logging, and policy enforcement before it affects real data or systems.
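A minimal sketch of this "verify, log, then act" pattern is shown below. The agent name, the allowlist, and the function names are hypothetical; the point is only that every action an AI proposes passes through a policy check and leaves a log entry before anything touches real systems.

```python
# Hypothetical allowlist: which actions each AI agent may perform.
ALLOWED_ACTIONS = {
    "support_bot": {"create_ticket", "add_comment"},
}


def authorize(agent, action, audit_log):
    # Deny by default: unknown agents and unlisted actions are refused.
    allowed = action in ALLOWED_ACTIONS.get(agent, set())
    audit_log.append({"agent": agent, "action": action, "allowed": allowed})
    return allowed


def execute(agent, action, handler, audit_log):
    # The handler only runs after the policy check passes.
    if not authorize(agent, action, audit_log):
        raise PermissionError(f"{agent} may not perform {action}")
    return handler()
```

Because denied attempts are logged too, the audit trail shows what the AI tried to do as well as what it did.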

Secure Model Sharing and Federated AI

As businesses increasingly collaborate on AI projects across vendors and platforms, secure model sharing will be a critical concern. Federated learning, where models are trained on decentralized data without moving it, will help reduce risk while maintaining performance and privacy, especially in healthcare, finance, and public sector use cases.
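The core aggregation step of federated learning can be sketched briefly. In the widely used federated averaging approach, each participant trains locally and sends only model weights, which a coordinator combines in proportion to how many examples each participant trained on. The Python below shows just that weighted average. The function name and the toy numbers are assumptions, and real deployments add protections such as secure aggregation that are omitted here.

```python
def federated_average(client_updates):
    # client_updates: list of (weights, num_examples) pairs, where every
    # weights list has the same length. Raw training data never appears.
    total = sum(n for _, n in client_updates)
    if total == 0:
        raise ValueError("no training examples reported")
    averaged = [0.0] * len(client_updates[0][0])
    for weights, n in client_updates:
        for i, w in enumerate(weights):
            # Each client's contribution is scaled by its share of examples.
            averaged[i] += w * n / total
    return averaged


# Two illustrative clients: the second trained on three times as much data.
updates = [([1.0, 2.0], 1), ([3.0, 4.0], 3)]
global_weights = federated_average(updates)
```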

LLM-Specific Security Frameworks

New frameworks tailored for large language models are emerging. These will go beyond traditional application security to include safeguards like:

- Output moderation

- Context scoping

- Role-based prompt controls

- Real-time abuse detection

Expect industry groups (like OWASP or NIST) to formalize these frameworks for use across the public and private sectors.

AI-Enabled SOCs and Automated Threat Response

Security Operations Centers (SOCs) of the future will run with AI at the core, not just for alert triage but for decision-making and automated response. AI agents will handle repetitive security tasks, correlate threat intelligence in real time, and even simulate attacker behavior to improve defenses continuously.

Proactive AI Governance

Finally, governance will become a proactive discipline. Instead of bolting on risk controls after an incident, organizations will bake in security and ethics from day one of an AI project. Tools that score model risks, track data lineage, and document decision logic will become standard in enterprise AI pipelines.

Does AppOmni Offer an AI Security Solution?

Yes, AppOmni has introduced an AI-driven security solution called AskOmni, designed to enhance SaaS Security Posture Management (SSPM). AskOmni serves as a generative AI assistant that helps security teams identify and remediate SaaS security issues more efficiently.

Key Features of AskOmni:

- Natural Language Interaction: Allows administrators to use conversational queries to understand and manage SaaS security risks.

- Contextual Insights: Provides tailored guidance on security observations, helping users comprehend the relevance of issues within their specific environment.

- Actionable Recommendations: Offers clear instructions for addressing security concerns, from threat hunting to deep analysis.

- Integration with Security Tools: Functions as a Model Context Protocol (MCP) server, enabling seamless integration with SIEM, XDR, IAM, and other security platforms for comprehensive threat investigations.

- 24/7 Assistance: Provides continuous support for questions, research, and remediation efforts.

- Expert-Trained AI: Powered by SaaS-specific models trained on real-world configurations, misconfigurations, and remediation practices, AskOmni leverages insights from SaaS security experts and environments to deliver relevant, high-impact guidance instantly.

By incorporating AskOmni into their security operations, organizations can leverage AI to gain deeper insights into their SaaS environments, streamline remediation processes, and enhance overall security posture.
