AI is no longer a future initiative; it is already woven into the SaaS platforms your business depends on. Copilots draft emails, agents trigger workflows, and API-driven integrations move data at machine speed. These capabilities create tremendous value, but they also behave exactly like high-powered users and SaaS applications rolled into one.

That makes the security conversation refreshingly simple: secure AI exactly the way you already secure SaaS. Securing AI draws on the same set of principles security teams apply every day in SaaS Security Posture Management (SSPM).

| SSPM Question | AI Translation |
| --- | --- |
| Who has access? | Which copilots, agents, and service accounts are connected? |
| What can they do? | What scopes, tokens, and privileges have been granted? |
| Are configurations secure? | Are GPT endpoints, model settings, and retention policies hardened? |
| Are we watching behavior? | Can we detect mass exports or unexpected prompt patterns in real time? |
| Can we prove compliance? | Do we have evidence of least-privilege and Zero Trust for every AI identity? |

If your current SSPM program answers these questions for people and SaaS apps, you’re 80 percent of the way to governing AI.
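To make the translation concrete, each AI integration can be tracked as an inventory record with the same fields you would keep for a human user. The sketch below is illustrative only: the `AIIdentity` class, its field names, and the sample scope strings are assumptions made for this example, not an AppOmni schema or any vendor's API.

```python
from dataclasses import dataclass, field


@dataclass
class AIIdentity:
    """Hypothetical inventory record for a copilot, agent, or service account."""
    name: str
    kind: str                 # e.g. "copilot", "agent", "service_account"
    installed_by: str         # human identity that connected the integration
    granted_scopes: set[str] = field(default_factory=set)
    required_scopes: set[str] = field(default_factory=set)

    def excess_scopes(self) -> set[str]:
        """Scopes granted beyond what the integration actually needs."""
        return self.granted_scopes - self.required_scopes


def over_scoped(inventory: list[AIIdentity]) -> list[AIIdentity]:
    """Return the identities that hold more privilege than they require."""
    return [identity for identity in inventory if identity.excess_scopes()]


# Sample data (made up for illustration).
inventory = [
    AIIdentity("mail-copilot", "copilot", "alice@example.com",
               granted_scopes={"mail.read", "mail.send"},
               required_scopes={"mail.read", "mail.send"}),
    AIIdentity("crm-agent", "agent", "bob@example.com",
               granted_scopes={"crm.read", "crm.export", "admin.write"},
               required_scopes={"crm.read"}),
]

flagged = over_scoped(inventory)
for identity in flagged:
    print(identity.name, "installed by", identity.installed_by,
          "has excess scopes:", sorted(identity.excess_scopes()))
```

The record answers "who has access" (name, kind, installer) and "what can they do" (granted scopes) in one place, which is the same shape of question an SSPM program already asks about people and apps.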

Treat AI like the operator it is

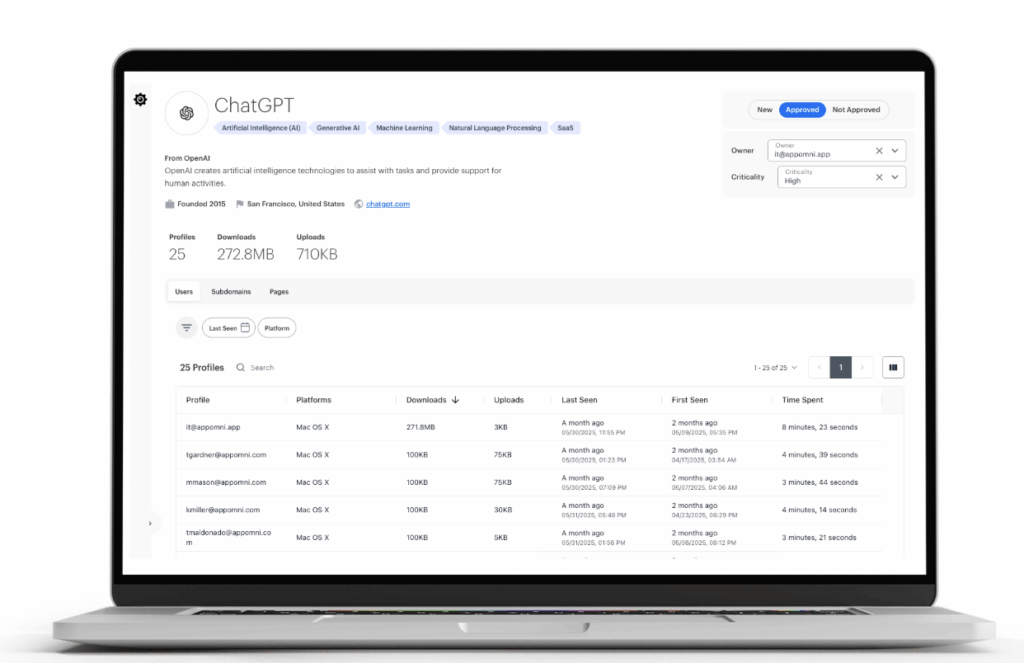

- Inventory and discover AI, everywhere

  - Surface copilots embedded in Microsoft 365, agents connected by OAuth, and standalone GenAI apps in minutes.

  - Map the data each AI integration can touch and the human identity that installed it.

- Enforce least privilege from day one

  - Apply AI access controls with the same role-scoping and approval workflows you use for new human users.

  - Grant AI integrations the smallest set of permissions they need. No more, no less.

- Continuously monitor for anomalies

  - Flag risky AI behaviors like first-ever mass downloads, model-parameter changes, or unapproved fine-tune jobs.

  - Stream activities into your SIEM/SOAR for a single source of truth.

- Develop AI governance

  - Secure configurations and detect stale or over-scoped tokens before attackers find them.

  - Audit model settings, data-retention flags, and callback URLs just as you audit SSO or MFA.

- Document and demonstrate compliance

  - Generate artifacts that show auditors every AI identity, its permissions, and its activity trail.
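Two of the checks named above, stale tokens and first-ever mass downloads, can be sketched in a few lines. This is a minimal illustration under stated assumptions: the 90-day staleness window, the 500-item download threshold, and the dictionary shapes for tokens and events are all hypothetical, not values or formats taken from any real product or log source.

```python
from datetime import datetime, timedelta, timezone

STALE_AFTER = timedelta(days=90)   # assumed staleness window
MASS_DOWNLOAD_THRESHOLD = 500      # assumed items-per-event threshold


def stale_tokens(tokens, now):
    """Return the IDs of tokens that have not been used within STALE_AFTER."""
    return [t["id"] for t in tokens if now - t["last_used"] > STALE_AFTER]


def first_mass_downloads(events):
    """Return the first event per identity that meets the download threshold.

    Events are processed in order; later mass downloads by the same
    identity are not flagged again.
    """
    seen = set()
    flagged = []
    for event in events:
        is_mass = (event["action"] == "download"
                   and event["count"] >= MASS_DOWNLOAD_THRESHOLD)
        if is_mass and event["identity"] not in seen:
            seen.add(event["identity"])
            flagged.append(event)
    return flagged


# Sample data (made up for illustration).
now = datetime(2025, 6, 1, tzinfo=timezone.utc)
tokens = [
    {"id": "tok-1", "last_used": datetime(2025, 5, 20, tzinfo=timezone.utc)},
    {"id": "tok-2", "last_used": datetime(2025, 1, 5, tzinfo=timezone.utc)},
]
events = [
    {"identity": "crm-agent", "action": "download", "count": 12},
    {"identity": "crm-agent", "action": "download", "count": 900},
    {"identity": "crm-agent", "action": "download", "count": 1200},
    {"identity": "mail-copilot", "action": "send", "count": 700},
]

stale = stale_tokens(tokens, now)
alerts = first_mass_downloads(events)
```

In practice the thresholds would be tuned per identity and the flagged events forwarded to the SIEM/SOAR rather than held in memory; the point is that the logic is the same kind of rule a team already writes for human users.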

The upside of a single SaaS security strategy

Keeping AI inside your existing SSPM motion avoids tool sprawl, budget creep, and duplicated effort. More importantly, it delivers consistent Zero Trust across humans, apps, and machine operators alike. The process of security has not changed, just the inputs.

How AppOmni secures AI in SaaS

Ready to see how AppOmni extends proven SaaS controls to the AI layer? Download the AI Security Toolkit for a closer look at discovery workflows, least-privilege enforcement, and real-time anomaly detection purpose-built for AI.

AI in SaaS is only going to get more common. If we treated it like an entirely separate domain, we’d be drowning in complexity and tool sprawl.

Keep AI security simple

AI security doesn’t need to be a new category. It’s not some radical shift. It’s just the logical next step in how we secure SaaS.

If your SSPM program can already:

- Discover unmanaged apps

- Lock down access

- Monitor non-human identities

- Detect weird behavior

…then you’re more than ready to handle AI.

You don’t need new tools. You need a smarter way to use the ones you’ve got.

And if you want to see how AppOmni helps folks do that, download the AI Security Toolkit.
